Why you’re doing audio levels wrong, and why it really does matter

When I got my first job in public radio almost nine years ago, I was aware that I brought to the position a rare and valuable credential: a recent memory of what it was like to listen to public radio before working in it.

Alas, this precious resource, like so many others, proved nonrenewable. Within a year, I found myself asking the eternal internal question: “Do listeners actually care about [fill in the blank]?”

We try to answer such questions — by soliciting audience feedback, by its nature anecdotal, or by commissioning research, which is expensive and often poorly executed.

But there are moments of revelation when those limited modes of inquiry produce answers so conclusive and compelling that all attuned pubcasters perceive them subconsciously, like a great disturbance in the Force.

Such was the case recently when Current’s Ben Mook posted an NPR-produced chart, displaying in devastating clarity what we’ve all known: Some public radio content is WAY LOUDER than the rest. (Or, more problematically, way quieter, but that doesn’t render as well in ALL CAPS.)

Do listeners actually care? Anecdotal evidence surfaced when On the Media, which according to the chart boasts one of the burlier waveforms, tweeted a link to Mook’s article.

@onthemedia @Radiolab @snapjudgment The weirdest one is ATC, which can have enormous shifts in volume from story to story.

— Robert Ashley (@robertashley) June 6, 2014

@onthemedia @loueyville This is really interesting. I've been downloading TAM podcasts and honestly can't hear some of them.

— Will Lavender (@willlavender) June 6, 2014

@onthemedia @robertashley @Radiolab @snapjudgment I like to listen to podcasts in the shower.

This American Life is too quiet to hear.— Ian Tornay (@crash7800) June 6, 2014

https://twitter.com/Neural_Coil/statuses/474968342684385280

If you want research, we’ve got that, too. An NPR Labs study last year found that a sudden change of just 6 decibels within a program stream sent half of a study group of 40 listeners diving for their volume knobs. Bigger shifts annoyed them so much that they said they’d turn off the radio entirely.

It’s as if millions of voices cried out in terror and were suddenly silenced.

As a content creator, I’m actually made more nervous by the half of listeners who don’t fiddle with the radio when the level spikes or drops. Picture it: They’re listening in a car or train, a poorly mixed cut of phone tape comes on, and it sinks below the noise floor into oblivion.

Whoever spent their day getting and cutting that tape may as well have called in sick.

A (com)pressing issue

Any good reporter knows that if you want the truth, follow the money.

How do I know that climate change is real? Corporations are betting on it. The fossil fuel industry is investing billions in developing Arctic oil and gas reserves that will become accessible only if polar ice retreats at the rate that scientists expect.

How do I know that inconsistent levels are bad for ratings? Commercial radio stations compress their signals into undifferentiated candy bar-shaped waveforms that are equally loud and equally soft, all the time, always and forever. (Yes, I know my use of “loud” here is technically incorrect. I’ll get to that later, nerds.)

This dynamic range compression (not to be confused with data compression) is electronic or digital processing that automatically boosts quiet sounds and lowers loud sounds. (A related effect called “limiting” rolls off only the peaks and leaves the valleys alone.) Compression can be applied in different ways and to varying degrees, but at its most extreme, compression is used to make everything as loud as it can possibly be.
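For readers who think in numbers, here is a minimal sketch of the idea in Python. It models only the static input/output curve of a compressor and a limiter, working in decibels. The function names, threshold, ratio and makeup gain are illustrative assumptions, not settings from any real broadcast processor, and real units also have attack and release timing that this ignores.

```python
def compress_db(level_db, threshold_db=-20.0, ratio=4.0, makeup_db=12.0):
    """Static compressor curve, working entirely in decibels.

    Above the threshold, output rises only 1 dB for every `ratio` dB of
    input (this lowers the loud sounds). Makeup gain is then added to
    everything (this is what boosts the quiet sounds).
    All default values are made up for illustration.
    """
    if level_db > threshold_db:
        level_db = threshold_db + (level_db - threshold_db) / ratio
    return level_db + makeup_db


def limit_db(level_db, ceiling_db=-3.0):
    """Limiter curve: hold peaks at the ceiling, leave everything below alone."""
    return min(level_db, ceiling_db)


# A hushed passage and a loud one, as levels in dBFS (hypothetical values).
quiet_in, loud_in = -40.0, -4.0
quiet_out, loud_out = compress_db(quiet_in), compress_db(loud_in)
print(f"dynamic range in:  {loud_in - quiet_in:.0f} dB")
print(f"dynamic range out: {loud_out - quiet_out:.0f} dB")
```

With these made-up settings the loud passage ends up where it started and the quiet one comes up by 12 dB, so the gap between them shrinks from 36 dB to 24 dB. Push the ratio higher and the threshold lower and you approach the candy bar.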

Here’s an example of commercial-style radio compression applied to perhaps the most famous dynamic contrast in the classical music literature. First we hear it raw, then compressed.

Yes, that sounds nasty. Yes, it robs the music of its essential character. But there’s a reason commercial radio does that. It makes for a very easy listening experience, with no knob-fiddling. A super-compressed station can sink into the unobtrusive background of a dentist’s office like wallpaper, and stay there about as long as the literal wallpaper. And that means ratings, my friends.

While many public radio stations apply some amount of overall compression to their broadcast signals and digital streams, it’s usually pretty subtle by commercial standards, and many barely compress at all.

I suspect public radio’s aversion to compression stems from the fact that most stations started out airing classical music, and many still do, at least in some dayparts. Popular music tends to be somewhat dynamically static, but expressive contrasts of loud and soft are as integral to classical music as pitch, timbre and rhythm.

Compression aesthetically warps classical music, and jazz to a lesser extent, much more than it does any other common type of radio content. Yet, ironically, classical music needs compression more to avoid any number of problems.

Julie Amacher, the silky-voiced program director of American Public Media’s Classical 24 service, knows this all too well. C24 is the 98-pound weakling on NPR’s chart of volume levels, shivering in the shadows of beefcakes like WNYC’s On the Media and Radiolab (insert joke about loud New Yorkers here).

“If we have a piece that has incredibly soft parts, what happens then is the sounds sensors go off, the [silence] alarms,” Amacher told me. “Then we’re getting engineers around the country who are having to run out to their transmitters to turn their transmitter back on the air.”

Amacher and her hosts often find themselves adjusting levels during those hushed moments, driving the faders to the max and hoping that something will come out the other end, even if it’s just the violists shuffling in their seats. You could call that a form of manual compression.

To be clear, Amacher is not aesthetically opposed to automatic levels processing. In fact, she’s counting on stations to do it.

“We don’t put any processing or anything on the music that comes to stations, because we want the stations to be able to create their own sound, if you will,” she said. “I mean, every station engineer has their own way of processing that audio.”

Amacher’s approach is the standard one, and it’s probably necessitated by the politics of network-station relations, though I fear that the faith on which it is based may be undeserved. In fact, I think the “someone downstream will fix it” attitude is the root problem behind public radio’s chronically wonky levels.

A lot of producers don’t fuss too much over their mixes, live or pre-produced, because they assume that somewhere along the radio pipeline a magic box is spitting out a perfectly standardized product. All too often, that is simply not the case.

Musicians make the best radio

Like many people who work on this Island of Misfit Toys we call public media, I’m a failed artist. I went to music school, studied classical composition and electronic music, and still spend an occasional weekend busting out electro-art pop or semi-ironic covers.

Serendipitously, my training has been amazingly applicable to my actual career. Mixing a radio story is child’s play compared to mixing a record, so it’s not surprising that the best- and most consistent-sounding radio is made by us musician refugees hiding out in broadcasting until someone ejects us like the imposters we are.

Take Radiolab, the most sonically advanced radio program in the history of the medium. Host/creator Jad Abumrad studied composition at Oberlin, and technical director Dylan Keefe spent 17 years playing bass in the alt-rock band Marcy Playground (yes, he’s one of the “Sex and Candy” guys).

WNYC

Keefe, technical director of Radiolab, says he now mixes and masters the show for the “uncontrolled environment” of various listening platforms.

The show’s ultra-professional sound results from the union of two habitual knob-tweakers. “I don’t think it’s an accident. I think we speak the same language,” Keefe told me via ISDN from WNYC’s studios, where he and Abumrad obsessively futz with Radiolab’s levels.

“Frankly, that’s what we spend most of our time working on, is how does Radiolab sound in comparison to itself as it goes from piece to piece,” he said.

This is probably a good place for me to offer a definition of “mixing.” Mixing is the art of making all the bits and pieces of an audio composition (musical, journalistic, whatever) sound right relative to each other.

“Jad is a very, very masterful mixer,” said Keefe (himself no slouch at the board). “[Abumrad] is somebody who really knows how to make music out of people talking.”

And that’s what it is — music. Balancing an actuality against a voice track is like balancing the brass section against the strings, and it takes a musical ear to get it right.

Volume ≠ loudness

Unfortunately, in the era of digital audio editing, too many people use their eye. Whereas the radio mixologists of yesteryear just sat at a board, moving the faders in response to what they heard over the monitors (and maybe an occasional glance at the meters), digital editing presents us with waveforms on a screen that too many people just grow and shrink until everything looks even.

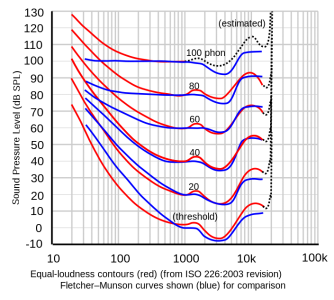

But the waveforms lie. We know this thanks to the Depression-era research of two scientists at Bell Labs — Harvey Fletcher and Wilden Munson. They gave us the Fletcher-Munson curve, known as the “equal loudness curve” in its updated forms, and it’s the most useful psychoacoustical concept you’ll ever learn about.

When I explain Fletcher-Munson to my students at Mercer University, I play them a low tone, say 100 Hz. The speakers in the classroom rumble almost inaudibly. Then I hit them with a piercing tone at 10,000 Hz, and watch as the upright nappers in the back row leap involuntarily, spilling coffee all over the notes they haven’t been taking. Good times.

“Which tone was louder?” I ask. “The second one, duh!” they reply.

Then I turn the laptop around to reveal two waveforms of identical height. The tones were of equal volume. Each vibration had the same acoustical power, sending the same quantity of air molecules rippling between the speaker cones and each student’s eardrums.

And yet, the second sound was WAY LOUDER. The lesson? Volume ≠ loudness.

Loudness is subjective; it’s the way we perceive the strength of a sound. But human hearing sensitivity is hugely uneven across the audible frequency spectrum. Fletcher and Munson did perceptual tests on people to find out exactly how uneven it is, and they came up with a series of curves, indicating the decibels it would take to make tones from the bottom to the top of our hearing range sound equally loud to an average person.

It turned out they didn’t get it exactly right, so the revised equal-loudness curves in this Wikipedia chart are shown in red.

The moral of this story for radio producers is that looking at a waveform on the screen doesn’t tell you everything about how loud it is. Sounds with radically different frequency content — like your interviewee on the phone vs. your host in the studio — can look the same, while one sounds WAY LOUDER.
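The classroom demo can be roughed out in code. The sketch below builds two sine tones of identical amplitude, confirms that a plain RMS measurement rates them the same, and then applies the standard A-weighting formula as a stand-in for the ear. A-weighting is a swapped-in approximation (it is loosely based on one equal-loudness contour), not the Fletcher-Munson curves themselves, and the sample rate, duration and amplitude are arbitrary assumptions.

```python
import math


def sine(freq_hz, seconds=0.1, rate=48000, amp=0.5):
    """A sine tone as a list of samples (full scale is 1.0)."""
    n = round(seconds * rate)
    return [amp * math.sin(2 * math.pi * freq_hz * i / rate) for i in range(n)]


def rms(samples):
    """Root-mean-square level: what the 'height' of a waveform reflects."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def a_weighting_db(freq_hz):
    """Standard A-weighting curve in dB (0 dB at 1 kHz).

    Used here only as a rough proxy for how sensitive hearing is at a
    given frequency; it is not the equal-loudness curves themselves.
    """
    f2 = freq_hz ** 2
    num = (12194.0 ** 2) * f2 ** 2
    den = ((f2 + 20.6 ** 2)
           * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
           * (f2 + 12194.0 ** 2))
    return 20.0 * math.log10(num / den) + 2.0


for freq in (100.0, 10000.0):
    tone = sine(freq)
    print(f"{freq:>7.0f} Hz  rms={rms(tone):.4f}  A-weight={a_weighting_db(freq):+.1f} dB")
```

Both tones measure the same on the screen, but the weighting penalizes the 100 Hz tone by roughly 17 dB more than the 10,000 Hz one, which is the gap the back row hears.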

Of course, we all listen as we mix, and we think we’re clicking and dragging those fader automation lines in response to what we’re hearing. But in my experience, the brain averages out the conflicting input it gets from two sensory sources — our perception ends up half-wrong.

Further complicating matters is the fact that the Fletcher-Munson effect diminishes as overall volume increases. That’s why it’s technically the Fletcher-Munson curves; there are many of them. The louder you crank the volume on your speakers or headphones, the louder the bass will sound relative to the other frequency ranges: the curve flattens.

To demonstrate that concept for yourself, pull up a song that you usually only listen to while exercising (i.e., blasting it in your earbuds to stimulate your adrenal glands). Try listening to it at normal volume, and it’ll sound like a whole new mix. You’ll hear little parts you never noticed; some details will become clearer while the overall sound will be less full.

So here’s another lesson: Mix at the volume at which you think people are likely to listen. People listen to music at all kinds of volumes, but they tend to listen to talk radio at a moderate one. Sure, you can crank it up to get a real close listen, to make sure there’s no repeating door slam or dog bark to give away your lazy ambi loop, etc. But afterward, bring it down into comfortable listening range and adjust your levels accordingly.

And it’s not just frequency; duration alters the way we perceive sound as well. Without getting into another science lesson that I am hardly qualified to give, the ear is kind of like the eye in this sense.

Get up in the night to relieve yourself, turn on the bathroom light, and your eyes will burn until your pupils adjust. Then tiptoe to the couch and turn on the TV. The volume at which you were listening before bed will sound WAY LOUDER at first, until your auditory system adjusts. Same deal, basically.

The point of all this perceptual stuff is: Sound is complicated, so trust your ears. You’re never going to quantitatively factor in something like Fletcher-Munson.

Actually, you might, if Rob Byers gets his way.

Masters of the universe

Hold that last thought for a minute, and let’s define “mastering.”

Whereas mixing is the art of making the elements of an audio composition sound right relative to each other, mastering is the art of making the mixed piece sound right relative to all the other audio in the universe that may come before or after it in a playlist.

The problem illustrated by that NPR chart we began with — Snap Judgment being WAY LOUDER than This American Life — isn’t so much one of mixing. It’s mostly a mastering problem.

Nate Ryan / APM

Byers (right), APM’s technical coordinator, works on a mix with Performance Today Technical Directors Craig Thorson and Elizabeth Iverson. The show uses loudness technology in all production.

“All these individual shows are fairly consistent on a daily basis, it’s just they’re not consistent with each other,” said Byers, a whip-smart APM engineer who spoke to me from St. Paul.

I think he’s being a little generous — I regularly hear poorly-mixed elements within pieces or programs that make me hit the roof. But Byers, as a member of a cross-organizational working group that’s looking at ways to standardize levels on everything distributed through the Public Radio Satellite System, perhaps feels a need to be diplomatic.

“We know we don’t have a problem with production practices. We have a problem with transmission and delivery practices,” he said.

But good delivery starts with good mastering. To wit, here are two promos, both alike in dignity, pulled straight from ContentDepot on the same day. It’s Radiolab vs. Marketplace, WHO’S GOIN’ DOWN, BROTHER?!

Both promos are beautifully mixed, but Radiolab’s is vastly better-mastered. Yes, I just implied that louder is better, and to an extent, I think it’s true. Especially these days.

“In the changing media universe, you know, I’m actually mixing . . . and, at this point, mastering for the uncontrolled environment,” said Keefe, who mixes and masters Radiolab under Abumrad’s creative direction.

“Meaning, if someone puts [Radiolab] on their phone, their iPad, or whatever,” he said, “I cannot assume what was there before or what’s coming after. I can only assume that it’s more varied than the stuff that’s on a public radio station.”

Back in the day, public radio shows could get away with being quiet, as long as they were about as quiet as all the other shows coming over the satellite. You could assume that your show was going to be heard up against another public radio show.

In the podcasting era, a growing share of your audience doesn’t listen that way anymore.

“I can assume that they were just watching a movie on their phone, or that they were just listening to Fugazi, or they were just listening to a book on tape, a number of different things,” Keefe said.

If you want to know just how differently most public radio is mastered compared to popular music, check out this head-to-head. In one corner, it’s This American Life host/creator Ira Glass. In the other, we have “Chandelier” singer Sia.

A direct switch from Ira to Sia on a playlist would be neither smooth nor rare — this is the listening context of the future (and, to an extent, the present).

Of course, loudness is a pretty fraught topic in popular music. For those who are blissfully unaware, a “loudness war” has been raging for at least two decades now, with each artist trying to make sure their song sounds louder than whatever plays before or after it.

Here’s the video I show my students to demonstrate this concept:

As in all wars, advances in technology have only increased the carnage. Digital multiband compression software can help you make sure that each region of the frequency spectrum is independently maxed out, all the time. Musical subtlety suffers the worst casualties.

Movies have gotten louder too, but since filmmakers like to leave themselves some room to grow for when the plot gets all ’splody, the average loudness is typically less than that of a pop song. I find that books on tape are, amazingly, even more inconsistent in their loudness than public radio shows. So how does Keefe master for a media environment of such random contrasts?

“I’m trying to play it down the middle,” he told me.

Using subtle bits of compression or limiting, but mostly just fastidiously massaging those fader lines, Keefe creates a sound that is even, full, and pretty loud. He still maxes out at -3 dBFS (3 decibels below 0, the highest possible amplitude). That leaves radio stations the standard headroom they’ve come to expect, which keeps the sound from clipping if the pot is a hair north of 0, and it strikes the happiest possible medium between maxed-out music and everything else.

This American Life could take a lesson here: You can still sound all hushed without being so damn quiet! Wouldn’t you rather stack up to Sia more like this?

I didn’t compress Glass into Top 40 territory. I just leveled off the most extreme peaks with a limiter and normalized to -3. Sia still blows him out of the water, but at least he puts up a fight.
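Here is a rough sketch of that two-step move in Python, to show why the limiter matters before normalizing. The hard clip below is a crude stand-in for a real limiter (which shapes peaks far more gently), and the sample values and the 0.2 ceiling are invented for illustration.

```python
import math


def db_to_amp(db):
    """Convert decibels to a linear amplitude ratio (-3 dB is about 0.708)."""
    return 10 ** (db / 20.0)


def peak_dbfs(samples):
    """Highest absolute sample, in dB relative to full scale (1.0)."""
    return 20.0 * math.log10(max(abs(s) for s in samples))


def hard_limit(samples, ceiling):
    """Crude limiter stand-in: clamp anything beyond +/- ceiling."""
    return [max(-ceiling, min(ceiling, s)) for s in samples]


def peak_normalize(samples, target_dbfs=-3.0):
    """Apply one gain to the whole file so its tallest peak hits the target."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return list(samples)  # silence: nothing to scale
    gain = db_to_amp(target_dbfs) / peak
    return [s * gain for s in samples]


# Hypothetical hushed voice track with one stray spike (a plosive, say).
voice = [0.1, -0.1, 0.1, 0.8, -0.1, 0.1]

peak_only = peak_normalize(voice)
limited = peak_normalize(hard_limit(voice, ceiling=0.2))

print(f"body level, normalize only:    {abs(peak_only[0]):.3f}")
print(f"body level, limit + normalize: {abs(limited[0]):.3f}")
print(f"peak after limit + normalize:  {peak_dbfs(limited):.1f} dBFS")
```

In this toy case, normalizing alone actually turns the voice down slightly, because the single spike sets the gain. Taming the spike first lets the same -3 dBFS ceiling carry a much louder voice.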

Meet your new meter

Of course, we can’t all have a Dylan Keefe at the knobs, so what we need are standards, right? PRSS already has some, as Byers informed me (to my shock).

“Voice levels should be around -15 dBFS, and nothing should ever go higher than -3,” he said. I think that’s probably a little too quiet relative to the broader audio universe, but hey, it’s something.

However, in analyzing content across the system, the working group that Byers is a part of reached a totally un-shocking conclusion: “About half of the programming is out of spec in one way or another,” he said.

And frankly, even if shows were within spec, there would still be annoying variability, because (among other reasons) decibels are used to measure objective volume, not subjective loudness.

So how do we finally fix the problem, once and for all?

“No one is saying let’s strap a compressor or a limiter across everything that goes through PRSS,” Byers reassured me (and by that I mean, all of you). “I think the direction we’re going to head in is finding a new way to analyze the audio . . . that’s perception-based.”

That new and better way has already been the production standard in the European Broadcasting Union for four years now. And for almost two years in the U.S., it’s been the FCC’s enforcement standard for the CALM Act — the federal law that mandates TV commercials can no longer be so much louder than Matlock reruns that they shock Granny right out of her Barcalounger.

Ladies and gentlemen, I give you . . . [drumroll] . . . Loudness Units. “LU” to his friends.

Basically, LUs are decibels graded on a curve — the Fletcher-Munson curve. An engineer watching an LU meter is going to get a much more accurate picture of how loud a signal is than with the old dBs, because it takes human hearing sensitivity into account.

Those wanting more details should watch this amazingly accessible introductory lecture on the European loudness standard by the Austrian TV engineer Florian Camerer (h/t Byers).

A better loudness standard isn’t going to immediately result in the “audio nirvana” that Camerer envisions, or automatically make your programming smooth and in-spec. At least, not all programming.

“For live programming, it’s always going to be up to the content creator, I think . . . to deliver a consistent product,” Byers said.

Live board ops, meet your new North Star: -24 LUFS. That’s what Byers said is the new optimum average level. Set a steady course, and hold it until you’re over the horizon (i.e. forever).

But for shows that send preproduced content to PRSS, there is the possibility that files could be “loudness normalized” at the point of distribution before they’re beamed out to stations. Software could analyze each file to determine its average LU and automatically gain the whole thing up or down to meet an ideal spec.

This is in contrast to the standard “peak normalization” that most radio producers rely on, which only standardizes the file relative to its highest amplitude spike, and therefore usually doesn’t do too much for you. At least use a limiter, man!
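To make the contrast concrete, here is a simplified sketch in Python. It uses plain RMS level as a stand-in for a true loudness measurement, which is an assumption made to keep the example short: a real LUFS reading per ITU-R BS.1770 also applies a frequency weighting filter and gating, neither of which is modeled here. The two "files" and the -24 target applied to RMS are illustrative only.

```python
import math


def rms_db(samples):
    """Average (RMS) level in dB re full scale. A stand-in for LUFS, not the real thing."""
    mean_sq = sum(s * s for s in samples) / len(samples)
    return 10.0 * math.log10(mean_sq)


def peak_normalize(samples, target_dbfs=-3.0):
    """Scale the file so its single tallest peak hits the target."""
    peak = max(abs(s) for s in samples)
    gain = 10 ** (target_dbfs / 20.0) / peak
    return [s * gain for s in samples]


def loudness_normalize(samples, target_db=-24.0):
    """Scale the file so its average level hits the target."""
    gain = 10 ** ((target_db - rms_db(samples)) / 20.0)
    return [s * gain for s in samples]


# Two hypothetical files: a quiet one with a single spike, and a dense loud one.
spiky = [0.05 if i % 2 else -0.05 for i in range(1000)] + [0.7]
dense = [0.5 if i % 2 else -0.5 for i in range(1000)]

for name, method in (("peak", peak_normalize), ("loudness", loudness_normalize)):
    a, b = rms_db(method(spiky)), rms_db(method(dense))
    print(f"{name:>8} normalization: spiky={a:6.1f} dB  dense={b:6.1f} dB")
```

Peak normalization leaves the two files more than 20 dB apart in average level, because one stray spike governs the whole quiet file. Normalizing on average level puts both at the same target with a single gain change each and no compression.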

We fear change

“I can hear hackles being raised right now all around the country, because we’re potentially saying, ‘We’re gonna touch your content,’” Byers said, adding (in the same tone of voice in which one would say, “Sir, put the gun and the baby down!”) that loudness normalization is not, repeat, not to be confused with compression.

But concerned parties need not argue over a hypothetical. Just listen to how it works on the popular (with audiences, not artists) music-streaming service Spotify.

“If you go into Spotify, and you go into your preferences, there’s a little tick box in your preferences where you can turn on or off loudness normalization,” Byers said.

With the normalization function on, compressed-to-the-max Black Eyed Peas songs will come down a bit in loudness, but most other stuff will come up, and everything will come out sounding more or less equal. iTunes has a similar function.

Musicians, like myself, are extremely excited about file-based loudness normalization, as it has the potential to be the carnation-in-the-muzzle of loudness warriors everywhere. I don’t have to be louder than you if we’re all going to be made equal in the end! Welcome to the new Workers’ Paradise, comrade!

The Takeaway (is a loud show)

Byers stressed to me that all this talk about a public radio loudness standard is only preliminary, at least as it pertains to his working group made up of APM, NPR and PRSS delegates. “My hope is that we’ll pull in a few other content creators and have a larger discussion about what the impact would be for this across the public radio community,” he said. (Byers welcomes feedback via Twitter and email.)

In all likelihood, Byers said, they’re going to have to come up with different standards for music and talk programming.

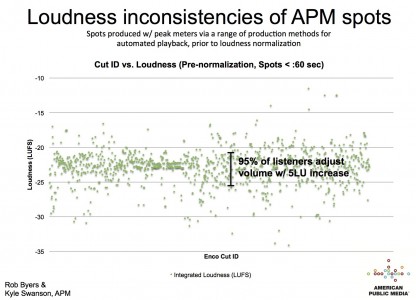

In the meantime, several provinces of the APM empire are already using loudness metering and normalization, including on all the little promos and underwriters they feed. As you can see in this chart that Byers provided, these audio odds and sods produced by lots of different people using lots of different methods used to be, predictably, all over the map. Now, he said, the complaints about sudden, brief explosions or crashes in loudness have ceased.

Of course, remember that loudness normalization is a mastering step. Whether you do it yourself for your local station or the Internet, or let Spotify or PRSS do it for you, you still have to, you know, mix your stuff well (which I hope this article has given you a few pointers on how to do).

Nothing I’ve written here is news to professional engineers, but most of the audio production in public radio isn’t done by professional engineers anymore.

There’s a new generation of content creators coming up. Its members (of which I am one) have done all their production themselves since day one on the job, using increasingly intuitive and powerful digital tools. For them, writing and mixing are part of the same integrated creative process, and that is resulting in tremendously exciting work that makes fuller use of the medium. At times, it’s also revealing their amateurism when it comes to audio engineering.

So, take it from me, the (failed) composer: When in doubt, take your eyes off the screen and listen. Then listen to your mix in a fast-moving car or while mowing the lawn. Then go back and fix everything you now know you did wrong. Repeat.

Adam Ragusea is the outgoing Macon bureau chief for Georgia Public Broadcasting. In August he will become Visiting Professor and Journalist in Residence at Mercer University’s Center for Collaborative Journalism.

I produce Snap Judgment. I have a great professional staff that mixes the show. They also produce music and live sound. We spend countless hours mixing with our ears, our meters, on speakers and in headphones. We aim for consistency throughout the course of the hour. We mix our show to -3db max. That level is music plus dialog. We are a sound-rich show that mixes hip-hop, electronica, jazz, foley, and sound effects all to create our storytelling experience. In our show, the story is king. That means the speaker must be heard. If you lose the speaker, you lose the plot. It is also our aim to create an immersive experience. We do take full advantage of the spectrum, but we still leave headroom for fluctuations in volume.

Let’s address the 6db change that people are calling the turnoff point. I try to make it so the entire show is balanced and consistent. That means when you turn on Snap Judgment, you set your level once, and you are good for an hour. If you play my show next to the Moth which is all dialog, then yes you should adjust the volume. But once. We try to make it so people can listen in their car, on their headphones, and while they are doing the dishes.

Finally, go look at the wave forms for produced music these days. The loudness wars have long existed in music, and the standards have definitely given way to wave forms that look like a solid block (that means they are loud as hell – compressed beyond recognition). All compression is not bad. Yes, we compress, but it is an art form. It’s done not just with meters, but our ears. We have meetings all the time about that level is too hot, or that song is distracting. We try to bring new and interesting music into our stories in a way that the audience has really responded to. We have a good following around the country and our sound is really working for us. We are very open to what people have to say about our show. We take feedback all the time. If you would like to reach us just send an email to stations at snap judgment dot org.

Peace,

Mark Ristich

Executive Producer

Snap Judgment

Hey Mark, am I reading a defensive tone in your comments here? Have people been giving you static about your levels? I think you guys are one of the few truly pro-sounding shops out there, along with RadioLab.

Hey Adam, I went back and gave it a once over. I think you wrote a good article. I just wanted people to know how much we care about this stuff at Snap.

Thanks,

M

Cool. Just in case it isn’t clear to anyone, my opinion (for what it’s worth), is that shows like Snap, OTM, and RL are right, and most others are too quiet.

Sorry I’m coming to this party so late…and I’m going to preface my comments with some background: when I listen to Snap Judgment, it’s usually in the car and my car is pretty noisy. It’s also usually on WELH 88.1 and our STL could stand to be a lot cleaner than it is. Also, we use a Broadcast Warehouse DSPX-FM-Mini-SE on the TALK2 preset. This is a “good” processor, but not a “great” one. Finally, I personally have trouble understanding people with accents unless and until I spend a few months interacting with them.

Mark, I think the levels are *consistent* in Snap, but frankly, I often have a VERY hard time listening to Snap Judgment. It’s not that I have to fiddle with my volume knob a lot, it’s that Glynn speaks so incredibly fast that he’s hard for me to understand under the best of circumstances, and the effect is exacerbated by the strong presence of music and sound effects throughout the show.

To my ears…and I want to emphasize that…part of the problem is that Glynn’s voice skews heavily towards bass. Or perhaps there’s some combination of vocal qualities that Glynn has that makes our processor skew his voice towards bass. And of course, more bass and less midrange = muddied-sounding audio.

I don’t know that this is really a “problem” for SJ, much less something you can “fix”. It’s more just one report from one listener on one station (although when I listened to SJ on K272DT 102.3FM in Santa Barbara CA…aka KCLU…back in 2011, I recall I had the same problems). Take it for what you will.

FWIW, other than the tonal issues, I do rather like Snap overall. The stories are great and the method of storytelling is entertaining as hell. :)

BTW, I should add that I also speak really, really fast. It’s something our pledge producers often yell at me during pledge drives when I’m pitching. I’ve gotten better about using deliberate slow-downs to emphasize a particular point I’m making, but I’m still not very good about keeping my default talking speed at something below Warp 8.

OTOH, a lot of New Englanders talk really damn fast, anyways, and we usually don’t have trouble understanding each other. Perhaps we’re all just a bunch of provincial hicks. :)

This just might be the best audio-for-radio piece I’ve ever read since my college textbooks back who knows when. I must admit, before digital hit I loved mixing to the little speaker in the Studer A807 reel-to-reel decks. It was a good reference in its day.

Thanks! You know, Keefe mentioned to me in our interview (audio at the top) that he often listens on cue speakers to hear how his mix will sound lo-fi.

I really appreciate this piece. Fresh Air is the WORST. My husband and I frequently listen to the podcast as we’re making dinner – wait, we USED to listen to the podcast. Between the sound of pots boiling, the stove fan, etc, I was constantly running over to the stereo to adjust the volume. So annoying that I now miss a lot of Fresh Air and listen, instead, to shows that I can hear over the sound of cooking. Sound Opinions comes to mind.

When I was mixing, I ALWAYS took the extra step of listening through the board monitor with my eyes closed, which I reckoned was the equivalent of listening to my crappy car radio (which I usually listen to with my eyes open, BTW). I think part of it was that I was one of the last people in the universe to cut tape as I was being trained.

This is really only a new issue because of podcasts. Stations can, do, and should manage their program levels to aim for whatever that station determines is their “standard level.” That’s why we use an audio console and not an audio router.

Where it gets a bit more complicated is automated/HD-2/3/12 stations, where there’s often not a human being behind the feed. But again, if a show is consistently 6 dB too high, just automate, and consider using a feature almost all systems have nowadays: an automix.
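For anyone who wants the arithmetic behind a fixed correction like that, here is a rough sketch, assuming the audio is already decoded to floating-point samples with full scale at 1.0. The function names are made up for illustration and don't correspond to any particular automation system.

```python
def db_to_gain(db):
    """Convert a level change in decibels to a linear amplitude multiplier."""
    return 10 ** (db / 20)


def apply_offset(samples, offset_db):
    """Apply one fixed gain offset to every sample in a program.

    A show that runs consistently 6 dB hot would get offset_db = -6.0,
    which multiplies every sample by roughly 0.5.
    """
    gain = db_to_gain(offset_db)
    return [s * gain for s in samples]
```

This only works when the show is off by the same amount every time. It changes level, not perceived loudness, and it does nothing about swings within a program.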

Sure, but this article is aimed at producers, not station engineers. I’m trying to help people create a product that’s idiot proof, will sound good anywhere, etc.

Totally, it’s just interesting because Fresh Air, for example, does have a professional engineer attached to their program. Mastering is always tricky; hopefully one day we’ll all settle on a smaller range.

Good article, I think this older piece from auphonic is also interesting on a similar topic.

https://auphonic.com/blog/2013/01/07/loudness-targets-mobile-audio-podcasts-radio-tv/

I think the point is good that higher audio levels may matter more with mobile listening.

Hi all. I’m really happy that this article is out there and is continuing the conversation! This feels like a solvable problem.

I want to clarify something that I think is muddying the waters a bit. The working group is focused on finding a solution to provide consistent levels in production and *delivery* to stations. The goal is to get distribution to a consistent place.

The resulting consistency would then obviously carry over into podcasts, streams, segmentation, etc.

So I don’t think you’ll see a spec or recommendation coming in relation to podcasts or streams as a result of this work – only distribution practices. But it will give content creators a consistent starting point to influence those other conversations.

Consistency is key!

Excellent article, Mr. Ragusea! And you’ve made a very good attempt at distinguishing the related but distinct concepts of level (energy level), loudness perception and dynamic range.

I find non-audio engineering trained content producers often confuse the idea of loudness management, which they should be enthusiastically embracing, with dynamic range compression, of which they should be justifiably wary (but certainly, judiciously, use).

To address Mr. Federa’s comment regarding automated stations – it’s easy to automate for level, but not for *loudness* – at least not in real time. Loudness preprocessing should work fine, but that’s not necessarily practical for all stations (since files have to be processed before transmission). This is why producers need to raise their consciousness regarding loudness, and produce to loudness targets while maintaining reasonable dynamic range.

And the loudness targets of broadcast and streaming may not be the same – so pre-processing at distribution points may be required.

I fear that Adam is advocating the idea that content should be mastered for the least-common-denominator of listening systems. Doesn’t it short-change the attentive listener with a nice, full-range stereo in a quiet room if we produce the content to be intelligible on a cell-phone speaker in a moving car? How do you master something to simultaneously be a compelling, immersive audio experience, and yet be easily ignored as audio wallpaper at the dentist’s office? Mastering too frequently targets a “typical” listening situation, which I argue doesn’t exist.

The cinema industry recognized this problem over 20 years ago and came up with the concepts of dialog normalization and decoding-side compression. We need a distribution standard, as Rob Byers advocates. But also, we need pressure on the playback product manufacturers and developers to put more of the level accommodation for end-listener environments into the playback side, and we need the tools on the encoding side to add our target level and compression hints data. That way, the highest quality program can be distributed everywhere, and the local playback system (or radio station automation) can reduce that quality as local conditions dictate.

Finally, even though, “…most of the audio production in public radio isn’t done by professional engineers anymore,” those doing the work still need to produce results as if they are. We need tools that let us LU-normalize our component clips before we mix. Such tools are expensive and hard to come by at the moment, though you can get part way there using RMS normalization if your clips are consistent internally. But if we demand LU-normalization tools and metering as features in our production environments, we’ll start to see them as standard equipment.
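To make the RMS fallback concrete, here is a minimal sketch, assuming each clip is already decoded to a list of floats with full scale at 1.0. The function names and the -24 dBFS target are illustrative assumptions, not a standard. RMS is also only a rough proxy for LU-based loudness, since it has no frequency weighting or gating.

```python
import math


def rms_dbfs(samples):
    """RMS level of a clip in dB relative to full scale (1.0).

    Under this convention a full-scale sine wave reads about -3 dBFS;
    some meters add 3 dB so that it reads 0.
    """
    if not samples:
        raise ValueError("empty clip")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0:
        return float("-inf")  # digital silence
    return 10 * math.log10(mean_square)


def normalize_rms(samples, target_dbfs=-24.0):
    """Scale a clip so its RMS level lands on target_dbfs.

    target_dbfs is a hypothetical house target. This does no peak
    checking, so a very dynamic clip could clip after gain is applied.
    """
    current = rms_dbfs(samples)
    if current == float("-inf"):
        return list(samples)  # nothing to scale
    gain = 10 ** ((target_dbfs - current) / 20)
    return [s * gain for s in samples]
```

Run over every component clip before the mix, this puts them on a common starting level. It only holds up if each clip is fairly even internally, as noted above.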

By all means, let’s raise the average quality of engineering. Let’s establish and adhere to average loudness standards. But let’s not master our content to best suit the worst listening environments.

How do you master something to simultaneously be a compelling, immersive audio experience, and yet be easily ignored as audio wallpaper at the dentist’s office?

Honestly Steve, music producers have been doing just that for decades! They throw together a rough mix and listen to it on their expensive studio monitor set-ups, then they listen on headphones, then they listen to it in their cars, then they throw it on a mono shower radio, etc.

They figure their job is to craft a mix that will sound good everywhere, just as it’s the job of a web developer to code a site such that it displays nicely on the latest, greatest version of Chrome as well as the crappy old version of IE that half of Americans have on their office computers and can’t update.

I mean, I sure like your fantasy of a world in which there’s a market for super hi-fi radio meant to be consumed blindfolded in an anechoic chamber. I would like to live in that world, but I don’t think it exists.

The closest thing we have to a show that demands/rewards that type of attentive listening is Radiolab. And yet, as I describe in my article, Radiolab is simultaneously the show that sounds best in a car on the interstate, because the mixes are so clear, consistent, and yes, LOUD.

I think one of the big reasons radio has remained so resilient through these years of media upheaval is that the technology involved in consuming it is stupid simple, cheap and ubiquitous. That includes both digital and terrestrial listening.

First, Adam, I need to say that I found your article well researched and well presented. The facts are all accurate and the argument is strong. I intend to use it as a reference for producers of modest technical background. However, you wrote…

“Honestly Steve, music producers have been doing just that for decades! ”

Except, they haven’t. I offer as evidence exhibit #1 your own example of the music loudness wars. Exhibit #2 is the (admittedly niche) increasing popularity of vintage vinyl recordings, partially because of the minimal dynamic processing. Exhibit #3 is Neil Young’s Pono. :-)

I mastered the podcast work I did in the previous decade as close to the top as possible, thinking exactly as you are in the closing of this article. However, then I got to see how the motion picture industry solved this problem, 20 years ago. And I see how they continue to solve it today in the face of wide ranging extremes of “3D surround” systems like Dolby Atmos, all of the way down to people watching movies on their tablets on airplanes. And they do this by distributing one, full-dynamic-range mix, and designing the players at the point of consumption to compensate.

I’ll grant you that we’re not going to get there overnight in the public radio world. But it’s a better long-term goal than limiting and compressing our way toward appeasing listeners in the worst of situations.

Thanks for your kind words Steve. Back atcha.

“It’s the very naive producer who works only on optimum systems,” or so said Brian Eno. And I could dig up a lot of similar quotes.

I think you are conflating the loudness war with what I’m talking about. Records haven’t been getting louder so that they’ll work on hi-fi and lo-fi systems. They’ve been getting louder because producers are locked in an arms race, enabled by advancing technology, to make sure their track doesn’t sound wimpy when heard adjacent to the next guy’s.

Vinyl records are indeed getting more popular, but only as a share of an overall recorded music market that’s shrinking rapidly. Also, I think you may overestimate the extent to which records are mastered differently for vinyl.

Pono is both a dream and a rip-off. Not even the most persnickety audiophile could tell the difference between a high bitrate mp3 and a wav. I hereby offer the fabulous prize of a date with yours truly to anyone who can prove me wrong in a blind taste test.

Anyway, I’m hardly advocating for radio shows to be compressed into brick walls. I’m saying that we, as an industry, need to get a little louder and more even. That’s hardly controversial, right?

We use a “magic box” in our airchain for OTA FM and streaming. It’s called an Aphex Compellor. It’s been on the market for almost two decades. For the most part, it doesn’t corrupt the sound quality, yet it keeps audio within normal, listenable range. Sometimes I think folks might try to overthink things a bit too much. When there’s an appropriate fix available, it would probably be best to just go get it and use it, rather than trying to reinvent the “wheel” by getting a herd of cats to march in line, asking content providers to “watch their levels.” Streaming is such a huge part of public radio listenership these days. With a Compellor, 1000 dollars fixes a lot of things, including poor level control.

Ahh, the Compellor. Responsible for some of my favorite guitar sounds of the 80s, notably Bad Religion’s “No Control” album.

In one of the two mentions, you say “dBSF” instead of “dBFS.”

Thanks, fixed.

Old news. . . . just now reaching npr. Dolby Labs indicated a problem with film soundtracks 20 years ago and developed a meter with which to grade films and trailers. I am not sure that this is still being used however. Has been used to check TV audio as well.

Indeed, as I discussed in the article, the LU standard is old and has been used in TV for a while. It’s new to American public radio, which is what the article is about.

Just reading this linked from The Pub – super interesting for a techie geek and regular podcast listener. And the Loudness Units presentation video made my day. Thanks for sharing this stuff – some of us really do want to know how the sausage is made (and then squeezed into pieces that are then chopped up by local affiliates…). Thanks!

SPOCK: “Captain, the Intrepid. It just died. And the four hundred Vulcans aboard, all dead.”

Come again?

Sorry, it was a rebuttal to your Jedi reference early in the article.

Very good article, by the way. I used to work at a station where the GM would have me ‘re-edit’ a program we acquired from Southern California (which was, admittedly, poorly produced) and adjust all the levels ‘up’ purely by visual reference of the waveforms. I would be editing and then loading the program segments into our automation moments before we aired the program. Occasionally, I didn’t get a segment uploaded in time and a segment from the previous day’s show would air. After two weeks, I gave up and just started using the Audition Hard-Limiter and let others at the station think I was doing it by the prescribed methods.

The voice in the RadioLab sample sounds clipped ;(