To build and engage your audience, consider these core metrics for measuring success

Across public media, we all agree that digital platforms are important for reaching audiences where they are. But which ones matter most, and how do we leverage them most effectively?

Metrics can help answer questions about how to best attract and retain online audiences by providing data on what’s working and what’s not. But there are hundreds of digital metrics to choose from, and each digital platform has its own unique complexities. How do we know what to prioritize in this ocean of data, and then how do we measure our progress in ways that motivate our teams?

Before we tackle these questions, let’s switch gears: Imagine you manage a glass factory and you want to increase productivity. What do you do?

A few decades ago in the former Soviet Union, managers at one such factory decided that moving forward, everyone would be judged by how much glass (by weight) they produced every day. Makes sense, right? Choose a key metric that would encourage higher productivity across the board.

Unfortunately, their approach didn’t work. Workers started producing really thick glass that was too heavy. They met the goal but produced glass that wasn’t actually usable.

The managers caught on and decided to change the key metric to square meters of glass. Guess what happened? The workers started producing acres of very thin glass. Again, they optimized toward the goal as defined, but the glass they produced was too brittle and kept breaking. The factory’s productivity still didn’t improve. Oops.

So why are we talking about this Soviet glass factory? Because there’s a fundamental truth just beneath the surface of this story: What we decide to measure defines who we are and what we do.

As soon as someone in charge declares what the goal is and what metric we’re going to use to track our progress, everybody pivots toward that metric. People change, the work changes, the output changes. And if we don’t choose those metrics carefully, unintended consequences can occur – sometimes more dangerous than brittle glass.

Is public media like a Soviet glass factory? Aside from some folks calling us communists — no, of course not. There isn’t one person in charge of public media who decides what we measure. Our missions aren’t all about productivity. But the dangers are just as real for us.

Within each of our organizations, if we’re not careful about which metrics we choose to use, we could aim our teams in the wrong direction and get outcomes that don’t serve our audiences well. In addition, if we don’t have a common language across the entire system about our goals and how we measure success, we limit our opportunities to work together toward common objectives and share digital best practices that have measurable impact.

Thus, we come to the key question: Which measurements should we choose to drive our progress in digital service, where the platforms keep changing and proliferating?

This question first surfaced last March during a meeting of news station leaders that was organized by the Station Resource Group and the Wyncote Foundation in cooperation with NPR. (Current is also funded in part by a grant from the Wyncote Foundation.) The initial aim of this small conference was ambitious — “strengthening the digital news service within public media.” And after a day of discussion, the participants recognized we first had to tackle something fundamental: creating a common framework for measuring our success.

Since last April, a working group of 15 public media organizations has been tackling this very question. The group includes leaders from stations, networks and producers — from radio and television — who are focused on creating consensus and sharing best practices on what we measure, how we measure and how we use data to inform decision-making in our organizations.

Working group contributors include representatives from American Public Media; Greater Public; KCTS, Seattle; KPBS, San Diego; KPCC, Pasadena, Calif.; KQED, San Francisco; New Hampshire Public Radio; NPR; Oregon Public Broadcasting; PBS; PRX; St. Louis Public Radio; WAMU in Washington, D.C.; WBEZ, Chicago; WBUR, Boston; WGBH, Boston; WHYY, Philadelphia; WITF, Harrisburg, Pa.; and WNYC, New York City.

Our working group set out to identify a broad set of Key Performance Indicators (KPIs) that could be widely shared and adopted through all of public media. We wanted to:

- Identify the most important shared goals of our organizations. Across public media, our organizations have so much in common, beginning with our missions and goals. The working group identified the shared goals that are the best candidates for shared KPIs.

- Provide the most useful, intuitive and actionable metrics that indicate progress against those shared goals. While each organization measures different things, consensus emerged about which of the metrics that matter most line up with our shared goals.

- Begin a process that would encourage broad adoption of these KPIs as a common definition of success. Because these KPIs are foundational and tied to overarching organizational goals — not specific formats, content types or markets — they should be useful to every public media organization.

- Avoid “metrics creep.” There are an overwhelming number of metrics that a station could measure. The goal was to come up with a blueprint for measuring success that any station could adopt as its core indicators and add to depending on the specific situation.

What are KPIs?

Key Performance Indicators are measures and ratios that track the quality, efficiency and effectiveness of an organization’s operations over time. They emerge from collective efforts of managers who are setting goals to measure success.

The most useful KPIs are:

- Tied directly to specific, organization-wide goals

- Top down, cascading throughout the organization and useful for all teams

- Familiar and understandable by all

- Easy to remember and limited in number

KPIs aren’t everything you measure — they’re a select subset of the most important metrics that really drive your organization’s success.

The working group decided to focus on the digital platforms that seemed to offer the biggest opportunity for sharing best practices. We also chose to work on KPIs related to audience service. Many of us are drowning in audience data and don’t always know which metrics are the ones that really matter, yet audience service is central to public media’s overarching mission.

But before selecting measurement indices, we first needed a common language — a framework — for talking about all the objectives regarding audiences. And the framework we adopted was recommended by Tom Hjelm, chief digital officer of NPR, and is already being deployed within NPR:

GROW — KNOW — ENGAGE — MONETIZE

At the core, these are our common goals around audiences:

- Grow audiences across platforms so we increase our reach in our markets, distribute our content more widely and expand loyal audiences.

- Know our audiences so we can form and deepen individual relationships and encourage membership.

- Engage with our audiences so we encourage affinity, loyalty, membership and advocacy.

- Monetize our audiences so we drive membership and other forms of revenue.

The details within these goals might vary by organization, but the basic framework is relevant for everyone. It also proved to be a useful guide for our selection of individual metrics.

For the second phase, members of the working group shared their organizations’ current digital dashboards, which collectively tracked more than 100 different performance measurements. That set of 100-plus metrics became the master inventory from which we began our selection process.

The next stage of work was rich discussion about which of these metrics should be elevated to true KPIs that would be recommended to the entire system.

This selection process involved filtering, thinning and organizing the master inventory of metrics that were already flowing to almost every station in the working group through our large array of digital information systems.

Each proposed metric was evaluated on exactly what question it tries to answer, whether it is the best metric for doing so, and the pros and cons of selecting it as a KPI.

The result was a prodigious winnowing down to the list of recommended KPIs included here.

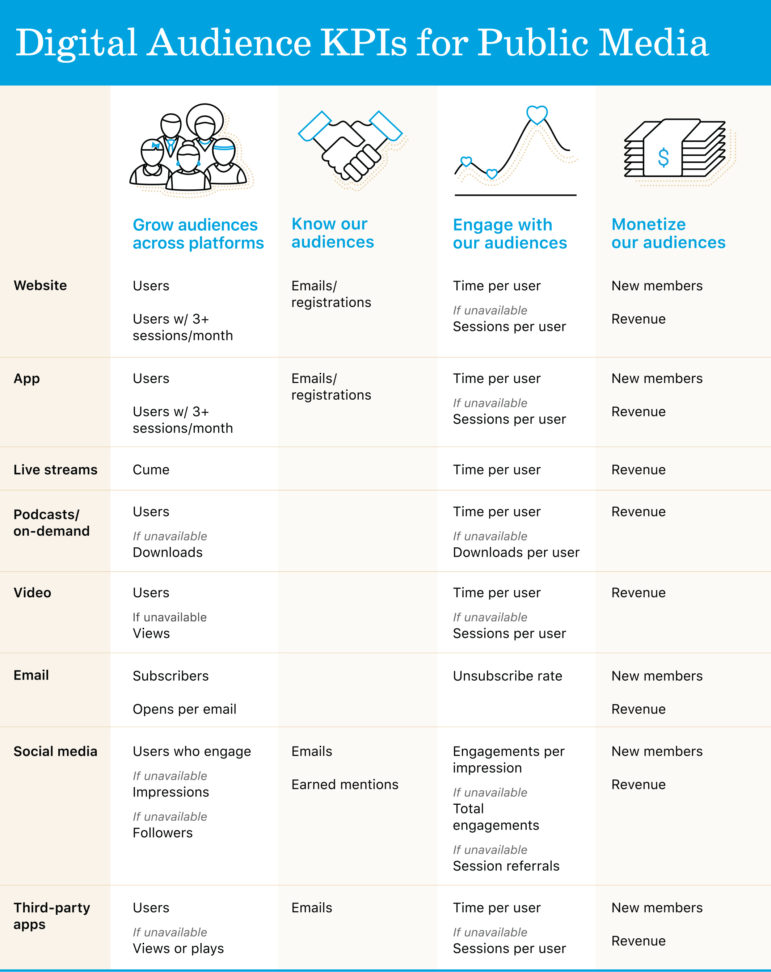

The resulting matrix pairs the Grow-Know-Engage-Monetize goals across the top with the individual digital platforms down the left. For each digital platform, there’s one (occasionally two) KPI that matters most. This is a foundational set of KPIs that every organization should be looking at, not a list of everything that should be measured.

For growing audience, the recommended KPIs emphasize reach by tracking how many people we touch with our content. But some reach is better than others. We know that regularly returning users who engage more deeply with us are more likely to become members and advocate for public media, so it’s increasingly important to track our loyal website users. To that end, the group recommends tracking users with three or more sessions per month as a website KPI. Similarly, we recommend focusing attention on the count of social media users who meaningfully engage with our content, not those who scroll by our posts.
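
As a rough illustration of the loyal-user KPI, here is a minimal Python sketch that counts users with three or more sessions in a month. The session log, the user IDs and the `loyal_users` function are hypothetical stand-ins for whatever your analytics export provides; they are not part of the working group's recommendations.

```python
from collections import Counter

# Hypothetical session log: one (user_id, month) pair per website session.
sessions = [
    ("u1", "2024-01"), ("u1", "2024-01"), ("u1", "2024-01"),
    ("u2", "2024-01"),
    ("u3", "2024-01"), ("u3", "2024-01"), ("u3", "2024-01"), ("u3", "2024-01"),
    ("u2", "2024-02"),
]

def loyal_users(sessions, month, min_sessions=3):
    """Return the set of users with at least min_sessions sessions in the given month."""
    counts = Counter(user for user, m in sessions if m == month)
    return {user for user, n in counts.items() if n >= min_sessions}

january_loyal = loyal_users(sessions, "2024-01")
print(len(january_loyal))  # 2 (u1 and u3)
```

The threshold is a parameter here only so a station could experiment; the group's recommendation is three or more sessions per month.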

For knowing our audience, nothing is more valuable than an email address that allows us to forge that individual relationship. For this reason, the number of new email addresses and registrations is a KPI many of us already track religiously.

For engaging with our audience, the working group believes that the best indicator is how much time the average person spends with us across a week or month. More time can translate into more membership support and advocacy for public media. When we are able to track time per user (e.g., minutes per month), that’s the best KPI. But for those platforms where we can’t accurately measure time on site (Google Analytics is not good at this, for example), another great KPI is how often the average person comes back, measured as sessions per user across a week or month. Engagement is a slippery term, so the working group wanted to focus on metrics that best capture meaningful behavior.
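
To make the arithmetic concrete, here is a small Python sketch of that choice: report minutes per user when time is measurable, otherwise sessions per user. The function name `engagement_kpi` and the sample totals are assumptions for illustration only, not figures from any station.

```python
def engagement_kpi(total_minutes, total_sessions, users):
    """Return (kpi_name, value) for one platform over one reporting period.

    total_minutes is None when the platform cannot measure time reliably.
    """
    if users <= 0:
        raise ValueError("users must be positive")
    if total_minutes is not None:
        # Preferred: average time each user spent with us in the period.
        return ("minutes_per_user", total_minutes / users)
    # Fallback: how often the average user came back in the period.
    return ("sessions_per_user", total_sessions / users)

# Hypothetical monthly totals for two platforms.
print(engagement_kpi(1200, 500, 100))   # ('minutes_per_user', 12.0)
print(engagement_kpi(None, 500, 100))   # ('sessions_per_user', 5.0)
```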

For monetizing, of course it’s all about counting new members and revenue that specific platforms drive, wherever possible. No surprise there!

Social media KPIs are complicated. The platforms are very different, and so are the metrics they offer. We can’t always get the ideal apples-to-apples numbers in measuring performance to grow and engage our audiences. So the working group created the following rules of thumb.

If you can measure users who actually engage with your content, use that KPI for tracking audience growth on that particular platform. If not, fall back to counting impressions, but only when no other Grow metric is available. Counts of followers or subscribers are increasingly poor indicators of how many people are actually seeing or engaging with your content on social feeds.
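
That rule of thumb can be sketched as a simple fallback in Python. The dictionary keys (`engaged_users`, `impressions`, `followers`) and the function `social_grow_kpi` are hypothetical names; each social platform reports these numbers under its own labels.

```python
def social_grow_kpi(platform_metrics):
    """Pick the Grow KPI for one social platform from whatever it reports.

    Prefers engaged users, falls back to impressions, and deliberately
    ignores follower or subscriber counts.
    """
    engaged = platform_metrics.get("engaged_users")
    if engaged is not None:
        return ("engaged_users", engaged)
    impressions = platform_metrics.get("impressions")
    if impressions is not None:
        return ("impressions", impressions)
    # Neither metric is available, so there is no recommended Grow KPI.
    return (None, None)

# Hypothetical monthly numbers for two platforms.
print(social_grow_kpi({"engaged_users": 8400, "impressions": 120000}))
print(social_grow_kpi({"impressions": 56000, "followers": 30000}))
```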

This is just the beginning of this process, and these KPIs are baseline recommendations of important digital audience metrics that all public media organizations can adopt — not a comprehensive list of everything you should measure.

What might your organization do with all this?

- Use these KPIs as a starting point and make them your own. Gather key leaders in your organization, talk about which KPIs are most relevant, and add new ones where your high-level goals are different. Create a short master list of topline audience goals and KPIs that every person in your organization recognizes and understands.

- Create a weekly or monthly report on your KPIs so everyone can see progress on these goals. The more that people see quantifiable progress, the more they will internalize the key organizational goals they represent, and the more everyone will move in the same direction to tackle them.

- Set targets for the KPIs that reflect your current biggest priorities. Motivate your team with numbers you think they can hit for the KPIs that matter most.

We are what we measure, so what we choose to measure matters greatly. Think of these KPIs as an opportunity to revisit what you measure and why.

If you’re curious about a specific KPI or want advice on implementation, the working group has created a set of slides that includes an appendix with more details on each digital platform.

Steve Mulder is senior director of audience insights at NPR and works extensively with member stations on digital analytics. Mark Fuerst is director of the Public Media Futures Forums, a strategic planning project supported by the Wyncote Foundation, and is facilitator of this working group.

Organizations should use language in their internal planning that they wouldn’t mind their external constituents seeing, and I don’t think “monetize” passes the test. I don’t think most people feel great about being monetized. And it makes public service nonprofit media sound commercial. How about “Earn Audience Contributions” for that last stage?

That’s a great point and important feedback for the working group. Our intent is that all kinds of revenue can fit into that category, so a broad label is useful, but the word “monetize” can be a little…crass.

Thanks, Chris. Excellent observation and one that we should work on. That said, and I hope I can say this without sounding defensive, the concept behind that work is the more important element. Whatever word you hang on that fourth piece of the “Digital Framework” that Tom Hjelm brought to NPR, it’s been part of the philosophy, culture and operating model of public radio from its very earliest days. We want citizens to be so devoted to our service that they will voluntarily provide direct private support. And the process through which people first learn about public radio, then listen, and listen more (engage), until they convert to become members is, I think, what Tom Hjelm was looking to project into the digital landscape. The framework is initial discovery (grow), using digital data to gain insight into user interest (know)… which would lead to deeper engagement, and then, if we’re fortunate, a deep enough appreciation of our work, so visitors/listeners/viewers agree to support us. We may have chosen the wrong word. But, at least to me, the framework is right.