How PBS Utah is using AI to customize audience experiences

Mike Janssen, using DALL-E 3

Technologies such as generative AI and spatial computing are advancing quickly, with headlines about new developments surfacing on a weekly if not daily basis. But which trends will really matter for public media? And who is putting effort towards them? In “Thinking Beyond Screens,” a new blog series on the PBS Hub, innovators throughout public media are showcasing their work with emerging technology to inform and inspire their colleagues. Current is republishing posts from the series with permission from PBS. In this post, Natalie Benoy of PBS Utah shares how her station has been using generative AI and machine learning for several new initiatives.

Like the advance of civilization, innovation can happen in all kinds of ways. A new resource may be unveiled after years of meticulous, carefully planned work, while a groundbreaking new treatment may be discovered completely by accident. In my case, my innovation journey began in the spring of 2023 at a PBS Utah Development all-staff meeting, when I announced to the department, “Surprise — I built a recommendation engine!”

To be fair, no one had asked for a recommendation engine. It was something I had originally started working on as a side project, at a time when PBS’ own recommendation engine — powered by Amazon Personalize, and a clearly superior product — was about to go public. But it was a perfect example of what the then-newborn Data Team at PBS Utah had been created to do — to find ways to put the ever-increasing amounts of data at our disposal to productive, actionable use.

As PBS member stations, we are no strangers to data. From Nielsen ratings to the donations brought in by the most recent pledge drive, most of us are familiar with descriptive analytics: metrics that tell us the results of a past campaign or initiative. Analyzing past performance is important, but recent advances in artificial intelligence and machine learning have greatly expanded what we can do with the data available. Increasingly, data can be used to segment viewers based on their streaming habits, identify which sustainers are most likely to churn, provide recommendations, personalize our donor communications, predict whether a donor will respond to a given solicitation, and so much more. And the implementation might be easier than you think.

So far, PBS Utah’s experiments with AI and machine learning fall into three main case studies: the program recommendation engine, direct-mail renewal response modeling, and email personalization via customer segmentation.

The PBS Utah recommendation engine

The idea: The goal of the PBS Utah recommendation engine is to provide a service to our streaming viewers and Passport members by recommending shows similar to those they already know and love. For casual viewers, we hope they find the PBS app valuable and sooner or later convert to Passport donors. For existing Passport donors, we hope they continue to stream and therefore continue to donate.

How it works: Using our station’s Passport viewing data (aka the VPPA data), we employ a machine-learning method known as association rule learning (also commonly known as market basket analysis) to identify meaningful patterns in the behavior of our Passport viewers. Specifically, we look at which shows are frequently watched by the same people. If 80% of viewers who watched All Creatures Great and Small also watched Around the World in 80 Days, we might infer that people who like All Creatures tend to also like Around the World in 80 Days. The technical details are slightly more complicated, but that’s the general idea.
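To make the idea concrete, here is a minimal Python sketch of one-to-one association rules mined from viewing histories. It computes the three standard rule metrics: support (the share of all viewers who watched both shows), confidence (the share of show A's viewers who also watched show B, i.e. the 80% in the example above) and lift (confidence divided by show B's overall popularity). The `viewing` dictionary, viewer IDs and thresholds are hypothetical; PBS Utah's engine was built in R, and its actual settings aren't described in this post.

```python
from collections import Counter
from itertools import permutations

def association_rules(viewing, min_support=0.01, min_confidence=0.3):
    """Mine one-to-one rules ("watched A" -> "watched B") from per-viewer show sets.

    Returns (A, B, support, confidence, lift) tuples, highest lift first.
    """
    n = len(viewing)
    show_counts = Counter()
    pair_counts = Counter()
    for shows in viewing.values():
        show_counts.update(shows)
        # Every ordered pair of distinct shows this viewer watched, counted once per viewer
        pair_counts.update(permutations(sorted(shows), 2))
    rules = []
    for (a, b), both in pair_counts.items():
        support = both / n                        # share of all viewers who watched A and B
        confidence = both / show_counts[a]        # share of A's viewers who also watched B
        lift = confidence / (show_counts[b] / n)  # > 1: B is more common among A's viewers than overall
        if support >= min_support and confidence >= min_confidence:
            rules.append((a, b, support, confidence, lift))
    rules.sort(key=lambda r: (r[4], r[3]), reverse=True)
    return rules

# Hypothetical viewer IDs and viewing histories
viewing = {
    "v1": {"All Creatures Great and Small", "Around the World in 80 Days"},
    "v2": {"All Creatures Great and Small", "Around the World in 80 Days", "Nova"},
    "v3": {"All Creatures Great and Small", "Around the World in 80 Days"},
    "v4": {"All Creatures Great and Small", "Around the World in 80 Days"},
    "v5": {"All Creatures Great and Small", "Nova"},
    "v6": {"Nova"},
}
rules = association_rules(viewing)
```

With this toy data, the rule from All Creatures Great and Small to Around the World in 80 Days has a confidence of 0.8 and a lift of 1.2. Lift is worth tracking alongside confidence because a very popular show would otherwise end up recommended to everyone.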

Once the association rules have been generated, we use the Media Manager API to retrieve the Passport start and end dates for each program and then filter our recommendations down to only those programs that are still available to stream. The public-facing app itself is a web app built using R Shiny and embedded on the PBS Utah website as an iframe, but there are many other ways to build web apps in your preferred programming language (and they’d probably be faster, too).
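The availability filter itself amounts to a date-window check. This sketch assumes the Passport start and end dates have already been pulled from the Media Manager API into a dictionary; the dictionary shape, show names and dates are hypothetical, and the sketch makes no API calls of its own.

```python
from datetime import date

def still_streaming(recommendations, availability, today=None):
    """Keep recommended shows whose Passport window includes `today`, preserving rank order.

    availability: show -> (start_date, end_date); end_date None means no scheduled end.
    Shows with no availability record are dropped, since we can't confirm they stream.
    """
    today = today or date.today()
    kept = []
    for show in recommendations:
        window = availability.get(show)
        if window is None:
            continue
        start, end = window
        if start <= today and (end is None or today <= end):
            kept.append(show)
    return kept

# Hypothetical dates, assumed to have been fetched from the Media Manager API beforehand
availability = {
    "Around the World in 80 Days": (date(2023, 1, 1), None),
    "Expired Series": (date(2020, 1, 1), date(2022, 12, 31)),
    "Upcoming Series": (date(2030, 1, 1), None),
}
recs = still_streaming(
    ["Around the World in 80 Days", "Expired Series", "Upcoming Series", "Unlisted Show"],
    availability,
    today=date(2024, 1, 15),
)
```

Dropping shows with no availability record is a conservative choice: it is better to show one fewer recommendation than to link viewers to something they can't stream.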

Results and further considerations: The recommendation engine receives regular spikes in traffic from its inclusion in our monthly Passport email newsletters and brings in sporadic organic traffic via the website. UTM codes added to all outbound links from the web app to the station video portal indicated small amounts of referral traffic; unfortunately, these have not worked properly since the move to Google Analytics 4.
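For reference, UTM tagging just appends `utm_source`, `utm_medium` and `utm_campaign` (and optionally `utm_content`) query parameters to each outbound link. Here is a minimal sketch using Python's standard `urllib.parse`; the URL and parameter values are hypothetical, and the sketch does not address the Google Analytics 4 tracking issue described above.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse

def add_utm(url, source, medium, campaign, content=None):
    """Return `url` with UTM parameters appended, preserving any existing query string."""
    parts = urlparse(url)
    query = dict(parse_qsl(parts.query))
    query.update({"utm_source": source, "utm_medium": medium, "utm_campaign": campaign})
    if content:
        query["utm_content"] = content  # e.g. which recommendation slot was clicked
    return urlunparse(parts._replace(query=urlencode(query)))

# Hypothetical link from the recommendation app to a show page
link = add_utm(
    "https://example.org/show/magpie-murders/",
    "recommendation_engine",
    "referral",
    "passport_recs",
)
```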

The recommendation engine has also shown its worth in email targeting. Let’s say you have a pledge email promoting Magpie Murders. Who should you send it to? You could send it to people who have pledged around Magpie Murders previously, but what about other fans of Masterpiece Mystery? With the recommendation engine, we can generate a list of shows most similar to Magpie Murders and send the pledge email to those people as well. This way, we can tailor our pledge emails without having to personally watch every pledge program ever made — which, aside from being my personal nightmare, is also inherently subjective.
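The targeting step can be sketched as: find the shows whose viewers are unusually likely to also watch the pledge program, then add those viewers to the people who have already pledged around it. This assumes rules are available as hypothetical `(antecedent, consequent, lift)` tuples and that viewer IDs can later be matched to email addresses; the show names, IDs and lift values below are made up.

```python
def pledge_email_audience(target, rules, viewing, prior_pledgers, top_n=5, min_lift=1.0):
    """Viewer IDs to receive a pledge email for `target`, plus the similar shows used.

    rules: (antecedent, consequent, lift) tuples. A rule "show -> target" with lift
    above min_lift means that show's viewers watch `target` more often than average.
    """
    similar = sorted(
        ((lift, a) for a, c, lift in rules if c == target and lift > min_lift),
        reverse=True,
    )[:top_n]
    similar_shows = {show for _, show in similar}
    audience = set(prior_pledgers)  # people who already pledged around the target show
    for viewer, shows in viewing.items():
        if shows & similar_shows:  # watched at least one similar show
            audience.add(viewer)
    return audience, similar_shows

# Hypothetical rules, viewing histories and prior pledgers
rules = [
    ("Vera", "Magpie Murders", 1.8),
    ("Grantchester", "Magpie Murders", 1.5),
    ("Nova", "Magpie Murders", 0.7),
    ("Magpie Murders", "Vera", 1.8),
]
viewing = {"v1": {"Vera"}, "v2": {"Nova"}, "v3": {"Grantchester", "Nova"}, "v4": {"Antiques Roadshow"}}
audience, similar_shows = pledge_email_audience("Magpie Murders", rules, viewing, {"v9"})
```

Here the Nova rule is excluded because its lift is below 1, so only Vera and Grantchester viewers are added to the list.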

Renewal response modeling

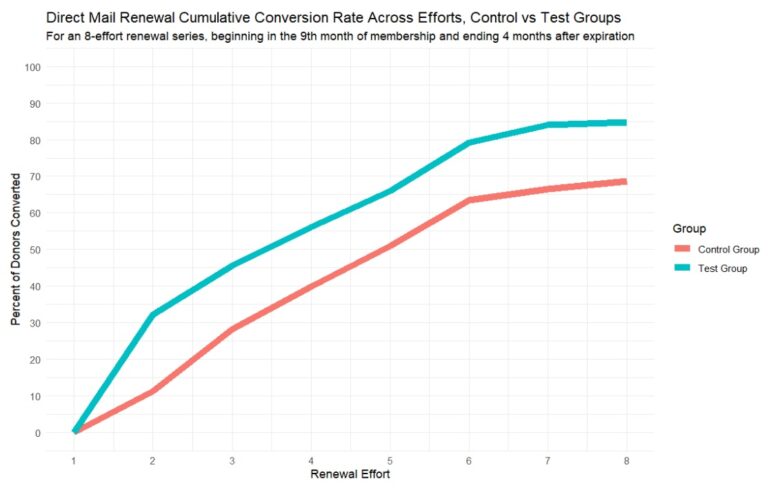

The idea: The goal of the renewal response model is to increase the conversion rate of PBS Utah’s direct-mail renewal program by using a machine-learning model to predict the optimal ask amount for each donor based on their donation history and other factors. By asking each donor to renew at an amount personalized to them, we aim to renew more donors earlier in their renewal series — therefore reducing the amount of mail we send — while simultaneously automating what would otherwise be a tedious and time-consuming process.

How it works: The renewal response model is a machine-learning model trained on past renewal campaigns that takes a donor’s prior giving history (the sum of Passport donations in the past year, prior years’ renewal amounts, the donor’s years on file, number of pledge premiums received in the past year, and so on) and other factors (such as the calendar month in which the renewal is sent) as its independent variables and uses those to predict the likelihood that a donor will respond to a range of theoretical ask amounts.
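The post doesn't name the model type. One common choice for a yes/no response prediction like this is logistic regression with the ask amount included as a feature, so the same donor can be scored at several hypothetical asks. The sketch below fits such a model with plain gradient descent on a tiny made-up training set and only two hypothetical features (ask amount and prior-year gift); a real model would use the fuller set of variables described above and would more likely be fit with a statistics package such as R's `glm()`.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def fit_logistic(X, y, lr=0.1, epochs=2000):
    """Fit logistic regression by batch gradient descent on standardized features.

    Returns (weights, bias, means, stds) so new rows can be scaled the same way.
    """
    n, k = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(k)]
    stds = [math.sqrt(sum((row[j] - means[j]) ** 2 for row in X) / n) or 1.0 for j in range(k)]
    Z = [[(row[j] - means[j]) / stds[j] for j in range(k)] for row in X]
    w, b = [0.0] * k, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * k, 0.0
        for z, target in zip(Z, y):
            err = sigmoid(b + sum(wj * zj for wj, zj in zip(w, z))) - target
            grad_b += err
            grad_w = [g + err * zj for g, zj in zip(grad_w, z)]
        b -= lr * grad_b / n
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
    return w, b, means, stds

def predict_response(model, row):
    """Predicted probability that a donor with these features responds."""
    w, b, means, stds = model
    z = [(x - m) / s for x, m, s in zip(row, means, stds)]
    return sigmoid(b + sum(wj * zj for wj, zj in zip(w, z)))

# Hypothetical training rows from past renewal mailings:
# [ask amount, donor's prior-year gift] -> responded (1) or not (0)
X = [[40, 50], [60, 50], [100, 50], [40, 50], [60, 50], [100, 50],
     [40, 120], [60, 120], [100, 120], [100, 120], [60, 120], [40, 120],
     [40, 20], [40, 20], [60, 20], [100, 20]]
y = [1, 1, 0, 1, 0, 0,
     1, 1, 1, 0, 1, 1,
     1, 0, 0, 0]
model = fit_logistic(X, y)

# Score one donor (prior-year gift of $50) at several candidate asks
probs = {ask: predict_response(model, [ask, 50]) for ask in (40, 60, 100)}
```

Because the ask amount is an input rather than a fixed property of the donor, the fitted model produces a probability for every candidate ask, which is exactly what the expected-value step needs.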

For example, a donor might have a predicted 50% chance of responding to a $40 ask amount, a 40% predicted chance of responding if we asked them to renew at $60, and a 20% predicted chance of responding at the $100 level. After filtering out any ask amounts with very low predicted probabilities (which filters some donors out entirely; these donors will receive a renewal email only), the optimal ask amount is the one with the highest expected value: the ask amount multiplied by the predicted probability of response. In our example above, the optimal ask amount would be $60, since 40% of $60 gives an expected value of $24, compared with $20 for both the $40 and $100 asks.
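The selection rule can be written in a few lines: drop asks below a probability cutoff, then pick the ask that maximizes ask × predicted probability. The 5% cutoff here is a hypothetical placeholder; the post doesn't say what threshold PBS Utah uses.

```python
def optimal_ask(response_probs, min_prob=0.05):
    """Pick the ask amount with the highest expected value (ask * P(response)).

    response_probs: ask amount -> predicted probability of responding.
    Asks below min_prob are dropped first; if none remain, returns None
    (in the workflow above, that donor would get a renewal email only).
    """
    viable = {ask: p for ask, p in response_probs.items() if p >= min_prob}
    if not viable:
        return None
    return max(viable, key=lambda ask: ask * viable[ask])

best = optimal_ask({40: 0.50, 60: 0.40, 100: 0.20})
```

With the example probabilities, the expected values are $20, $24 and $20, so `best` is 60.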

Results and further considerations: The renewal response model A/B test began in August 2023 and is still ongoing, but preliminary statistical testing suggests that donors in the test group convert at a higher rate than donors in the control group. A t-test of the renewal amounts also indicates no significant difference in the average amounts at which the two groups are renewing. In other words, the model gets more donors to renew earlier in their renewal cycle without reducing the donation level at which they renew. So far, the test group has cut direct-mail spend by 18% while keeping both the number of renewals and total renewal revenue nearly constant compared with the control group. But testing is ongoing, and results can change. That’s why we test things!
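The post doesn't say which test was used for conversion rates. A two-proportion z-test is one standard option, and Welch's t-test is a common form of the t-test for comparing average gift amounts when the two groups may have unequal variances. Below is a standard-library-only sketch; the counts and amounts are made up for illustration and are not PBS Utah's results. The Welch function returns the t statistic and degrees of freedom; converting those into a p-value needs a t-distribution CDF, which R's `t.test()` (Welch by default) or `scipy.stats.ttest_ind(..., equal_var=False)` provide.

```python
import math
import statistics

def two_proportion_ztest(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates between groups A and B."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return z, p_value

def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for mean(a) - mean(b)."""
    va = statistics.variance(a) / len(a)
    vb = statistics.variance(b) / len(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

# Illustrative numbers only: renewals out of pieces mailed, then renewal amounts
z, p = two_proportion_ztest(60, 200, 40, 200)
t, df = welch_t([60, 75, 40, 100, 60, 50], [60, 70, 45, 100, 55, 50])
```

Note that a non-significant t-test means the data show no detectable difference in average gift, not proof that the averages are identical.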

Another potential implementation of response modeling is for additional gift solicitations. At PBS Utah, add-gift campaigns are sent to nearly all donors, with generally lower response rates than renewals. With a machine-learning model to predict responses, we could choose to send add-gift mail only to the subset of donors most likely to respond to it, thereby reducing our direct-mail spend without reducing revenue. A recent test of this approach during Public Media Giving Days cut direct-mail spend by more than half with no negative impact on donation revenue.
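Once a model has scored each donor, choosing who gets the add-gift mailing becomes a ranking problem. This minimal sketch assumes each donor already has a predicted probability of responding; the donor IDs, scores and cutoff are hypothetical. The helper also reports what share of total expected responses the smaller list keeps, which is the trade-off this kind of targeting weighs.

```python
def select_for_mailing(scores, min_prob=0.0, max_pieces=None):
    """Return donor IDs ranked by predicted response probability, most likely first."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    chosen = [donor for donor in ranked if scores[donor] >= min_prob]
    return chosen if max_pieces is None else chosen[:max_pieces]

def expected_responses_kept(scores, chosen):
    """Share of all expected responses (sum of probabilities) covered by the chosen donors."""
    return sum(scores[d] for d in chosen) / sum(scores.values())

# Hypothetical predicted response probabilities for an add-gift appeal
scores = {"d1": 0.12, "d2": 0.03, "d3": 0.25, "d4": 0.08}
mail_list = select_for_mailing(scores, min_prob=0.05)
share = expected_responses_kept(scores, mail_list)
```

In this toy example, three of four pieces are mailed while keeping about 94% of the expected responses.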

Email personalization via customer segmentation

The idea: For marketers, true 1:1 communication between a business and its customers has long been the ideal. Customer segmentation may not get you all the way there, but it’s a great start. Our goal was to improve the relevance of our Passport email newsletters by using machine-learning techniques to segment Passport donors by their streaming habits and tailoring the email content accordingly.

How it works: Using the VPPA data, we experimented with three different machine-learning methods of Passport viewer segmentation: K-means clustering, latent class analysis and latent profile analysis. Each method used viewing genre as the level of aggregation (I wouldn’t recommend trying this at the show level). K-means clustering divides the data into K clusters, where K is a number you choose, by assigning each viewer to the cluster whose average viewing profile is closest to their own. Asking for four clusters might give you the “drama or bust” people, the “team science & nature” people, the “history buffs” and the “we’ll watch literally anything” people. Latent class analysis and latent profile analysis return similar results but rest on different assumptions and methodology. Once you have your customer segments, you can tailor your Passport emails to those particular groups’ interests.
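K-means itself is short enough to sketch. Each viewer is represented as a vector of genre shares (hypothetically, the fraction of their streaming time spent in each genre), and Lloyd's algorithm alternates between assigning viewers to the nearest centroid and moving each centroid to the mean of its viewers. The genre columns and data are made up. The sketch uses a deterministic farthest-first initialization so results are repeatable, whereas library implementations such as R's `kmeans()` start from randomly chosen centers.

```python
def kmeans(points, k, iters=100):
    """Lloyd's algorithm: returns (cluster label per point, final centroids)."""
    def dist2(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    # Farthest-first initialization: start from the first point, then repeatedly
    # add the point farthest from every centroid chosen so far.
    centroids = [list(points[0])]
    while len(centroids) < k:
        far = max(points, key=lambda p: min(dist2(p, c) for c in centroids))
        centroids.append(list(far))

    labels = None
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid
        new_labels = [min(range(k), key=lambda j: dist2(p, centroids[j])) for p in points]
        if new_labels == labels:
            break  # converged: no viewer changed clusters
        labels = new_labels
        # Update step: move each centroid to the mean of its members
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the old centroid if a cluster empties out
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids

# Hypothetical genre shares per viewer: [drama, science & nature, history]
viewers = {
    "v1": [0.90, 0.05, 0.05],
    "v2": [0.80, 0.10, 0.10],
    "v3": [0.10, 0.85, 0.05],
    "v4": [0.05, 0.90, 0.05],
    "v5": [0.10, 0.10, 0.80],
    "v6": [0.05, 0.15, 0.80],
}
labels, centroids = kmeans(list(viewers.values()), k=3)
```

The three resulting clusters line up with the drama, science and nature, and history viewers in the toy data. In practice you would try several values of K and compare how cleanly the clusters separate and how usable they are for email content.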

Results and further considerations: This one’s on the roadmap for testing within the next couple of months, so there are no results to share yet. Further implementation could involve variables beyond a viewer’s recent viewing genre(s): you might find viewing habits also vary by age or gender (as a matter of policy, PBS Utah does not collect most demographic information on donors, but other stations may be able to experiment with it) or some other variable. Customers can be segmented in all kinds of ways, limited only by the data you have available and how relevant it is to each use case.

At PBS Utah, these and other machine-learning models have been created using the R programming language, but you don’t actually need to be a programmer to harness the benefits of AI and machine learning in your organization. AI tools are increasingly being built into the software we use every day, often with no-code solutions and a more user-friendly interface. Take a look at your email platform or donor CRM and see if there are any new tools coming soon or ones you haven’t tried yet, or set your data architecture up for success while you’re at it (it’ll make things easier down the road). Or if you want to get more hands-on, Microsoft Excel’s Analysis ToolPak includes a regression tool for building simple predictive models with linear regression (polynomial terms can be added as extra columns).

Even a model that isn’t perfect — and nobody is out here getting 100% accuracy, I’ll tell you that — can still be useful to your station’s goals. Whether there’s a process you want to optimize, an outcome you want to predict or a piece of messaging you want to personalize, AI and machine learning can help you get there (without the killer robots, we promise).

Natalie Benoy is a Business Systems & Data Analyst at PBS Utah. Her current responsibilities include data mining, predictive analytics, data visualization, and finding new ways to increase revenue and reduce costs — or both at the same time.