How public media can balance AI innovation and public trust

Mike Janssen, using DALL-E 3

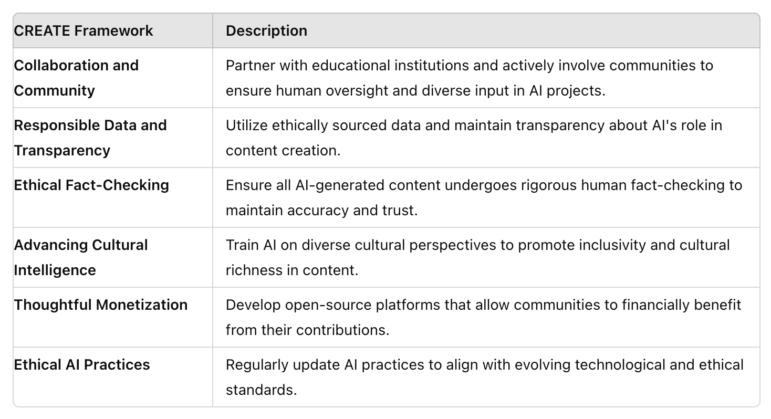

Public media needs a broad framework for working with AI. The CREATE framework, detailed below, provides a structured approach to help public media organizations navigate the complexities of AI and ensure that these technologies are used responsibly and ethically.

AI systems can offer tremendous benefits but can also inherit and perpetuate biases if not carefully managed. For public media practitioners who are just beginning to use tools like ChatGPT, it’s essential to understand that while these off-the-shelf technologies can be powerful, they also require careful oversight. As you start integrating AI into your workflows, it’s crucial to have guidelines that reflect the ethical standards of your organization. The CREATE framework is designed to help you do just that, whether you’re using existing AI tools or developing proprietary ones.

Public media has long been a guardian of diverse voices and cultural representation. However, as AI becomes more integrated into content creation, there is a risk that these technologies could undermine these values if not carefully managed. The CREATE framework offers a proactive road map to ensure AI aligns with public media’s mission to serve the public good, safeguarding the trust that audiences have in public media organizations.

Many of you will use off-the-shelf AI technology, and these tools can be powerful when used responsibly. You will need guidelines to ensure they are used in ways that reflect public media’s core values. Some of you will also be involved in developing proprietary AI tools tailored to your needs. Whether you’re adopting existing technology or creating your own, it’s crucial that public media values such as diversity, transparency and community service remain at the core of your AI strategy. That way, we can harness the power of AI while staying true to the principles that have long guided public media.

The CREATE framework emphasizes collaboration among public media stations, educational institutions and communities, fostering an interdisciplinary approach that draws on diverse expertise to advance ethical and culturally intelligent AI practices.

- C – Collaboration, Human Oversight, and Community Engagement

  - Prioritize Human Oversight: Ensure that all AI-generated content undergoes thorough human review before publication. Human oversight is crucial for verifying the accuracy of information and maintaining journalistic integrity.

  - Facilitate Ongoing Collaboration Between AI and Humans: Foster a collaborative environment where AI tools are used to enhance, not replace, human creativity and judgment. Encourage content creators to leverage AI as a tool for innovation while maintaining their unique editorial voice.

  - Collaborate and Adopt an Interdisciplinary Approach: Partner with educational institutions to integrate diverse perspectives and expertise into AI projects. For example, the collaboration between The Washington Post and Virginia Tech on AI is a great model for how media and academia can work together effectively.

  - Engage Your Community: Involve your community in the AI process, ensuring that their voices are represented in the content creation and decision-making process. This strengthens trust and ensures that AI serves the community’s needs and values.

- R – Responsible Data Sourcing and Transparency

  - Emphasize Ethical Data Sourcing: Use datasets that are ethically sourced and reflective of diverse cultures and communities. Engage with cultural experts to ensure that AI-generated content accurately represents the richness and diversity of human experiences.

  - Maintain Transparency in AI Usage: Clearly disclose the use of generative AI in content creation to your audience, community and educational partners. This includes specifying which parts of the content were AI-generated and outlining the role of human editors in refining the final output.

- E – Ethical and Accurate Fact-Checking

  - Enforce Rigorous Fact-Checking: Implement strict fact-checking protocols for all AI-generated content. AI tools should be seen as assistants rather than replacements for human judgment, especially in verifying factual accuracy.

- A – Advancing Cultural Intelligence and Inclusivity

  - Promote Inclusivity and Cultural Intelligence: Ensure that AI tools are trained on datasets that encompass a wide range of cultural perspectives. Engage with cultural experts to enhance the AI’s ability to produce content that is inclusive, culturally informed and authentic.

- T – Thoughtful Community Engagement and Empowerment

  - Facilitate Community-Driven Monetization: Create an open-source platform similar to Spotify’s early model, where culturally rich content contributed by the community can be shared and monetized. This platform would allow community members to benefit financially from their contributions while fostering a diverse and culturally vibrant content ecosystem.

- E – Ethical AI Practices and Sustainability

  - Regularly Review and Update AI Practices: Continually assess and update AI practices to keep pace with technological advancements and evolving ethical standards. Engage with stakeholders, including audiences, technologists, cultural experts and educational institutions, to refine your approach to AI in public media and community settings.

Call to action: Engage, share and learn together

If you work in public media, education or community leadership, we encourage you to engage with the CREATE framework by sharing it internally, discussing it with your teams and taking the next six months to learn more about AI and its implications for your work and communities. This period of exploration and education is crucial for ensuring that you are well-informed and prepared to guide your organizations in making responsible, ethical decisions about AI.

Use this time to delve into the principles outlined in the CREATE framework, assess how they align with your current practices and consider how you can integrate these values into your AI strategies. By fostering dialogue within your teams and communities, you can help shape the future of AI in public media to be a force for good — ethical, inclusive and community-driven.

Context

We know that AI systems are often biased, and this bias can manifest in various ways, including in the realm of audio and music. At TulipAI, we researched major audio and music-generation tools and discovered that these systems often fail to accurately replicate or understand the cultural nuances of certain music genres, such as mariachi. This shortcoming arises because the training data used to develop these AI systems lacks sufficient representation of diverse musical traditions.

For public media stations, this issue is particularly important. Audio content plays a central role in storytelling, education and cultural representation. If AI tools used in public media are biased or lack cultural understanding, the content they produce can misrepresent or even erase important cultural elements. This can undermine the very mission of public media to serve and reflect the diverse communities it represents.

By focusing on advancing cultural intelligence within AI systems, public media can ensure that these tools enhance, rather than diminish, the richness of the content they produce. Many public media stations are connected to universities, offering a unique opportunity to drive this change. We encourage you to reach out to the computer science or entrepreneurial departments, as well as the social sciences departments, to cross-collaborate on creating a more inclusive and culturally intelligent AI future.

Understanding open source in AI

Organizations like Hugging Face and Meta are helping to make AI technologies accessible, transparent and community-driven by releasing models and tools openly. Open source means that the underlying code and models are freely available for anyone to use, modify and distribute.

This approach fosters innovation and collaboration, enabling a diverse range of contributors to enhance and refine AI tools. In the context of public media, open-source AI allows for greater inclusivity and cultural sensitivity, as it enables communities to participate in the development process, ensuring that AI technologies reflect their unique perspectives and needs. By embracing open-source principles, public media can harness the collective intelligence of a global community, creating AI-driven content that is not only ethical but also deeply resonant with diverse audiences.

Moreover, acknowledging the biases inherent in AI systems is a crucial step. Public media organizations must take an active role in making these systems more inclusive, ensuring that the AI tools they use or develop are free from the stereotypes and prejudices that can marginalize communities. Through collaboration, transparency and a commitment to cultural intelligence, public media can lead the way in creating AI that truly serves all people.

How to participate

We invite you to share your thoughts and feedback on this framework with Current. Your insights will contribute to the ongoing refinement of the CREATE framework, helping to ensure it becomes a cornerstone of ethical AI practices in public media. Together, over the next six months, let’s learn, collaborate and build a more informed and culturally intelligent AI ecosystem that truly serves the public interest.

Davar Ardalan is an AI and media specialist with a background that spans National Geographic, NPR News, The White House Presidential Innovation Fellowship Program, IVOW AI and TulipAI. She is offering an upcoming course about AI and audio that will cover how to leverage AI for content creation, historical reenactments and more while mastering techniques to enhance sound quality and produce multilingual, ethical and culturally rich audio. Ardalan is also preparing to transition out of TulipAI and join Booz Allen Hamilton as an AI Strategist, Senior, in early September.

This content was crafted with the assistance of artificial intelligence, which contributed to structuring the narrative, ensuring grammatical accuracy, summarizing key points and enhancing the readability and coherence of the material.